Today we’re revisiting a mid-range GPU battle between the Radeon RX 570 4GB and GeForce GTX 1060 3GB. It’s been a little over a year since we compared these two head to head in an extensive 28-game battle, so we thought it was about time to take another look now that GPU prices seem to be returning to more reasonable levels.

Since the original comparison we’ve received multiple driver and game updates, as well as quite a few new games, which we’ve added to the benchmark list. Of the 27 games we'll be testing, just half a dozen were featured in last year's battle.

Last time the Radeon RX 570 was a mere 2% slower on average, so it was a very even fight. Pricing has shifted, though: the RX 570's reference price is $170 versus about $200 for the GTX 1060 3GB, yet today the 1060 starts at $230 while the 570 starts at $240, so the Radeon currently costs around $10 more. Like all graphics cards, both were better priced a year ago.

Representing the green team is the MSI GeForce GTX 1060 Gaming X 3G and for the red team the MSI Radeon RX 570 Gaming X 4G. Although both models are pretty heavily overclocked out of the box, we’re still going to squeeze every last bit of performance from them with a custom overclock.

At stock, the RX 570 Gaming X is clocked at 1281 MHz for the core and 1750 MHz for the GDDR5 memory. We were only able to increase the core clock to 1435 MHz, a 12% overclock, and the memory to 1900 MHz, a 9% overclock.

As for the GTX 1060 Gaming X 3G, it comes with a base core clock of 1569 MHz and 2002 MHz for the GDDR5 memory. We were able to hit 1770 MHz for the core, which resulted in a boost frequency of about 2150 MHz depending on load. The memory also reached 2275 MHz, so that's a 13% core overclock and a 14% memory overclock.
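The overclock percentages quoted for both cards are just the relative increase over the stock clock. A minimal Python sketch that reproduces them from the clock speeds given above; the helper name `percent_increase` is our own and not part of any overclocking tool:

```python
def percent_increase(stock_mhz: float, oc_mhz: float) -> float:
    """Return how much higher oc_mhz is than stock_mhz, in percent."""
    return (oc_mhz - stock_mhz) / stock_mhz * 100


# Stock and overclocked clocks (MHz) quoted for the two MSI Gaming X cards.
clocks = {
    "RX 570 core": (1281, 1435),
    "RX 570 memory": (1750, 1900),
    "GTX 1060 3GB core": (1569, 1770),
    "GTX 1060 3GB memory": (2002, 2275),
}

for name, (stock, oc) in clocks.items():
    print(f"{name}: +{percent_increase(stock, oc):.1f}%")
```

Rounded to whole numbers, these come out at 12%, 9%, 13% and 14%, matching the figures in the text.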

As mentioned earlier, we have 27 games in total, all of which have been tested at 1080p using both the stock and overclocked configurations. As usual, our Corsair GPU test rig built in the Crystal Series 570X has been used, and inside we have an i7-8700K clocked at 5 GHz with 32GB of DDR4-3200 memory. We realize this hardware configuration is overkill for these GPUs, but the idea is to remove any potential system bottlenecks that might mask GPU throughput. Now let’s get to the good stuff…

Benchmarks

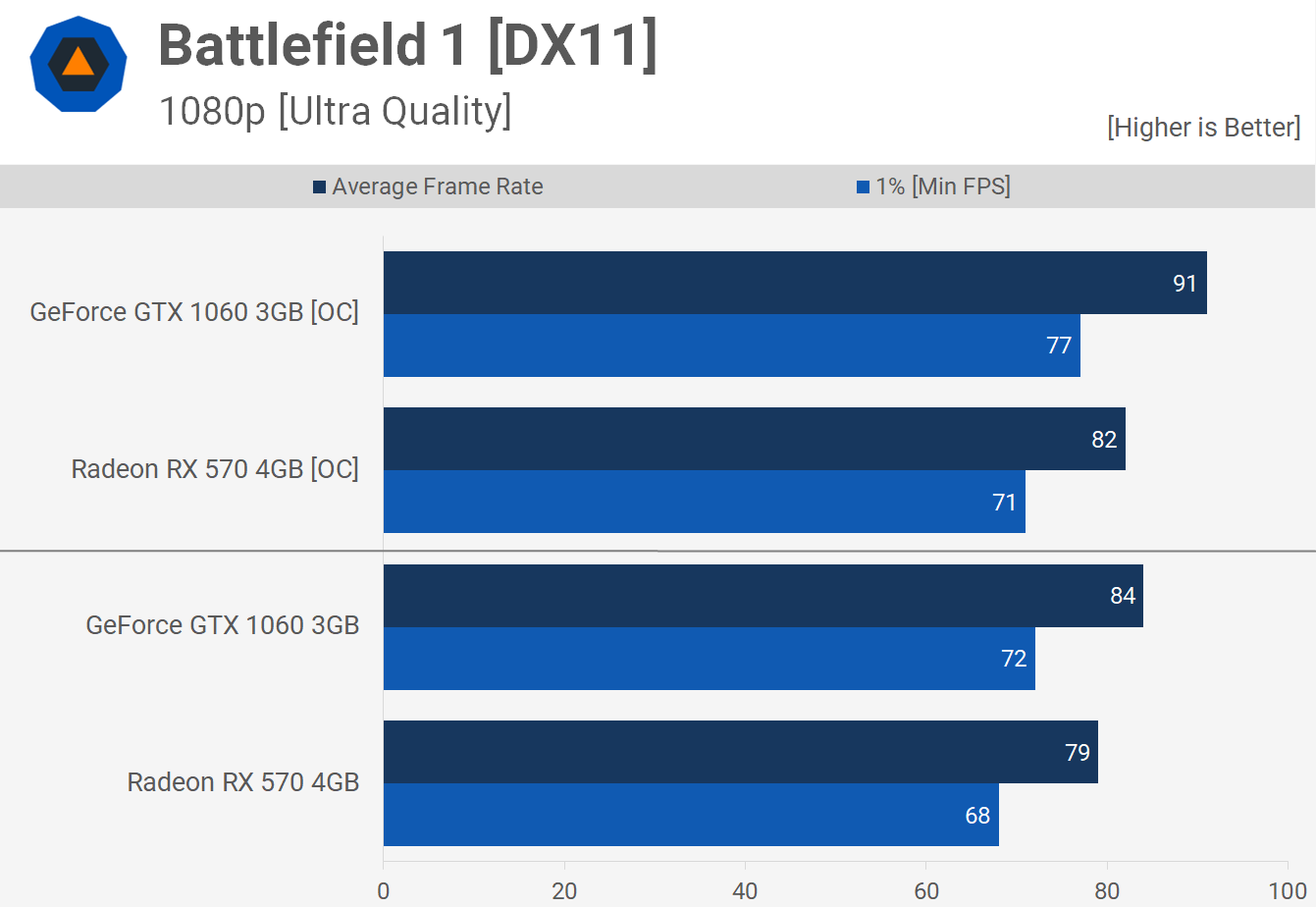

First up we have the Battlefield 1 results, where the GTX 1060 was 6% faster out of the box and 11% faster once overclocked. So it's a clear win for Nvidia, but it has to be said that the RX 570 still puts on a show, delivering well over 60 fps at 1080p using the maximum in-game quality settings.

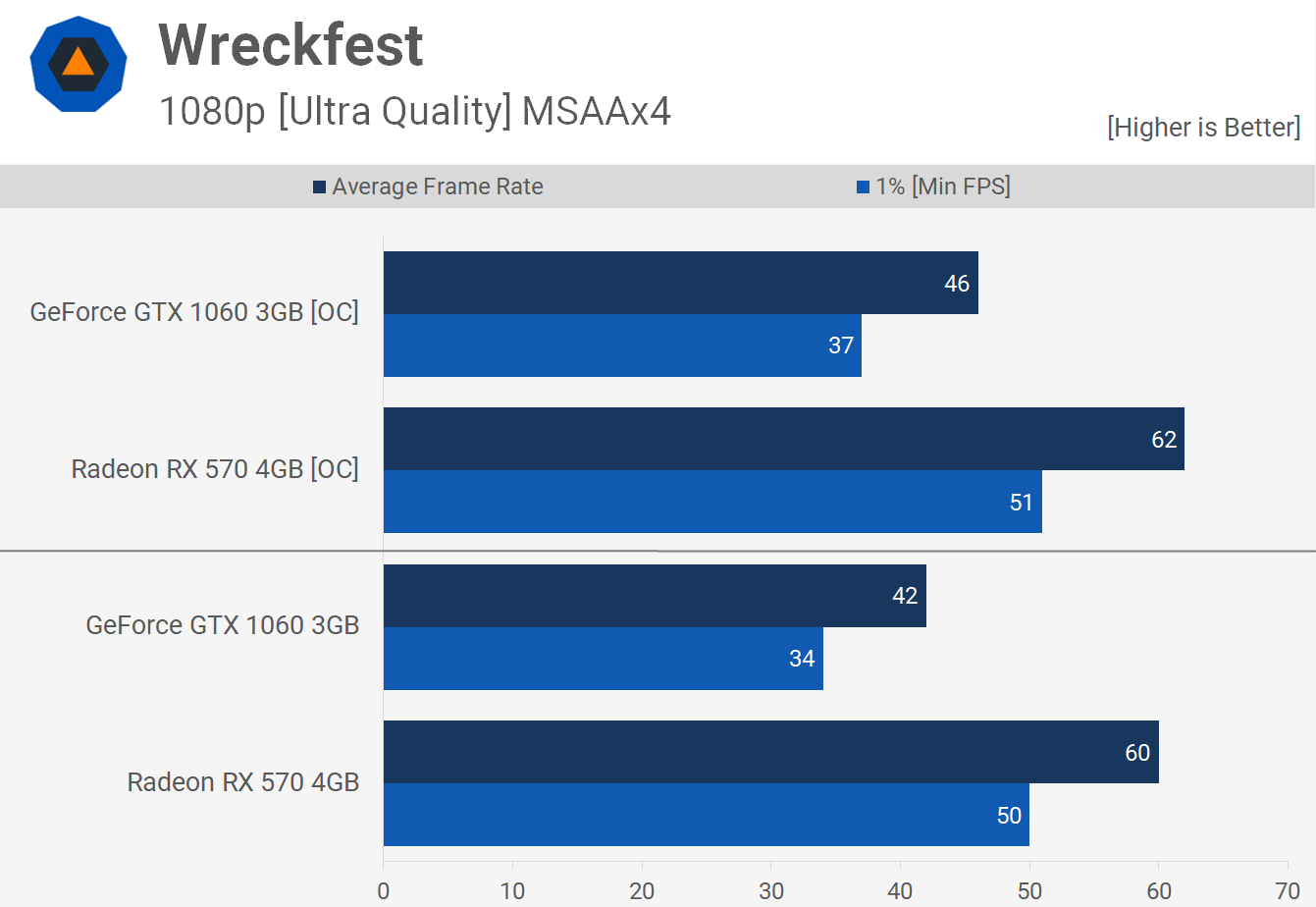

Unfortunately Nvidia still hasn’t addressed its underwhelming Wreckfest performance, allowing AMD to rear-end it in brutal fashion as the RX 570 provides up to 47% more performance. More critically, the RX 570 averaged 60 fps out of the box, whereas the GTX 1060 3GB struggled to break the 40 fps barrier.

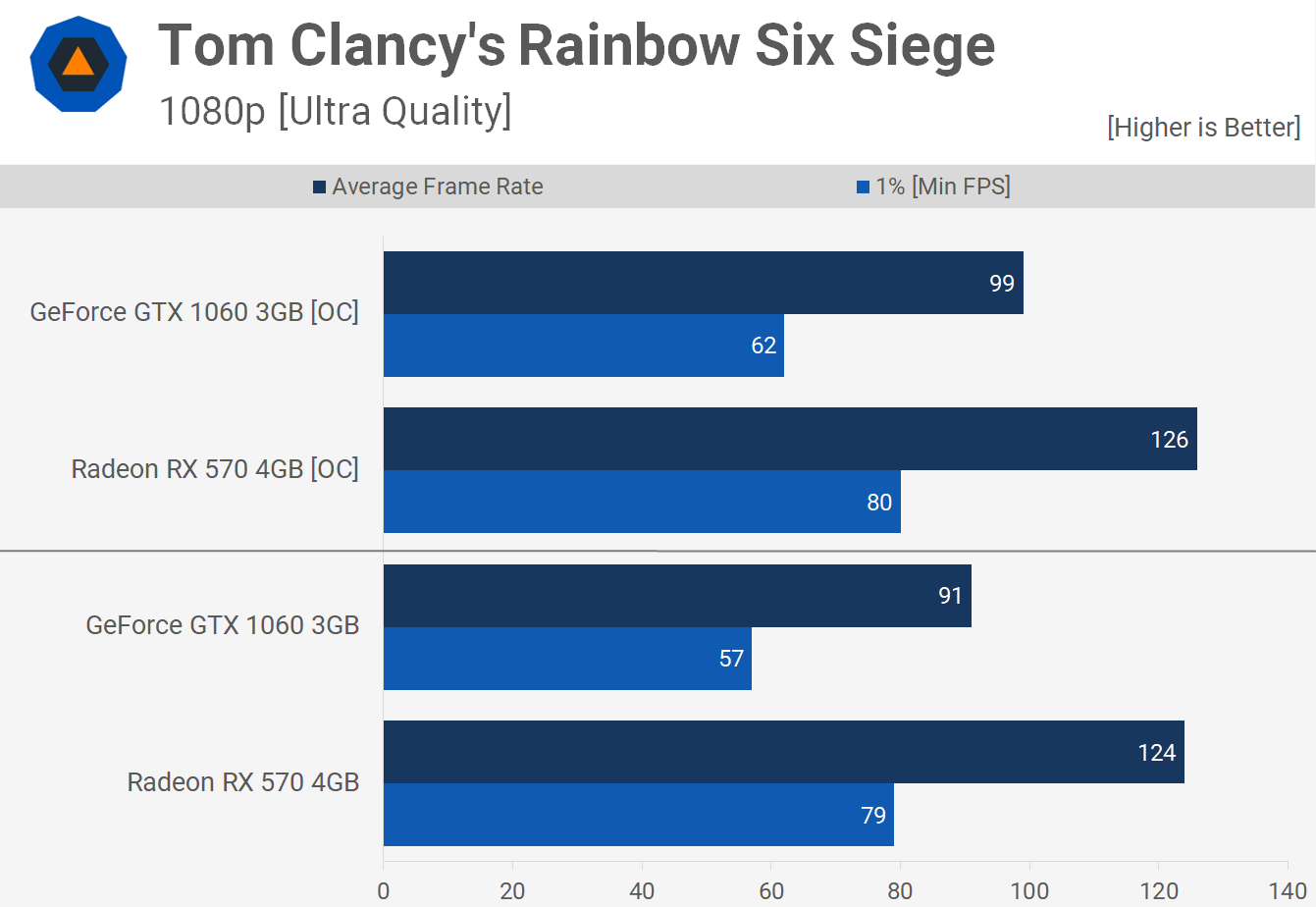

Tom Clancy's Rainbow Six Siege is another title that really favours the red team: the RX 570 beat the GTX 1060 by a 36% margin out of the box, though Nvidia was able to narrow that to 27% once both GPUs were overclocked.

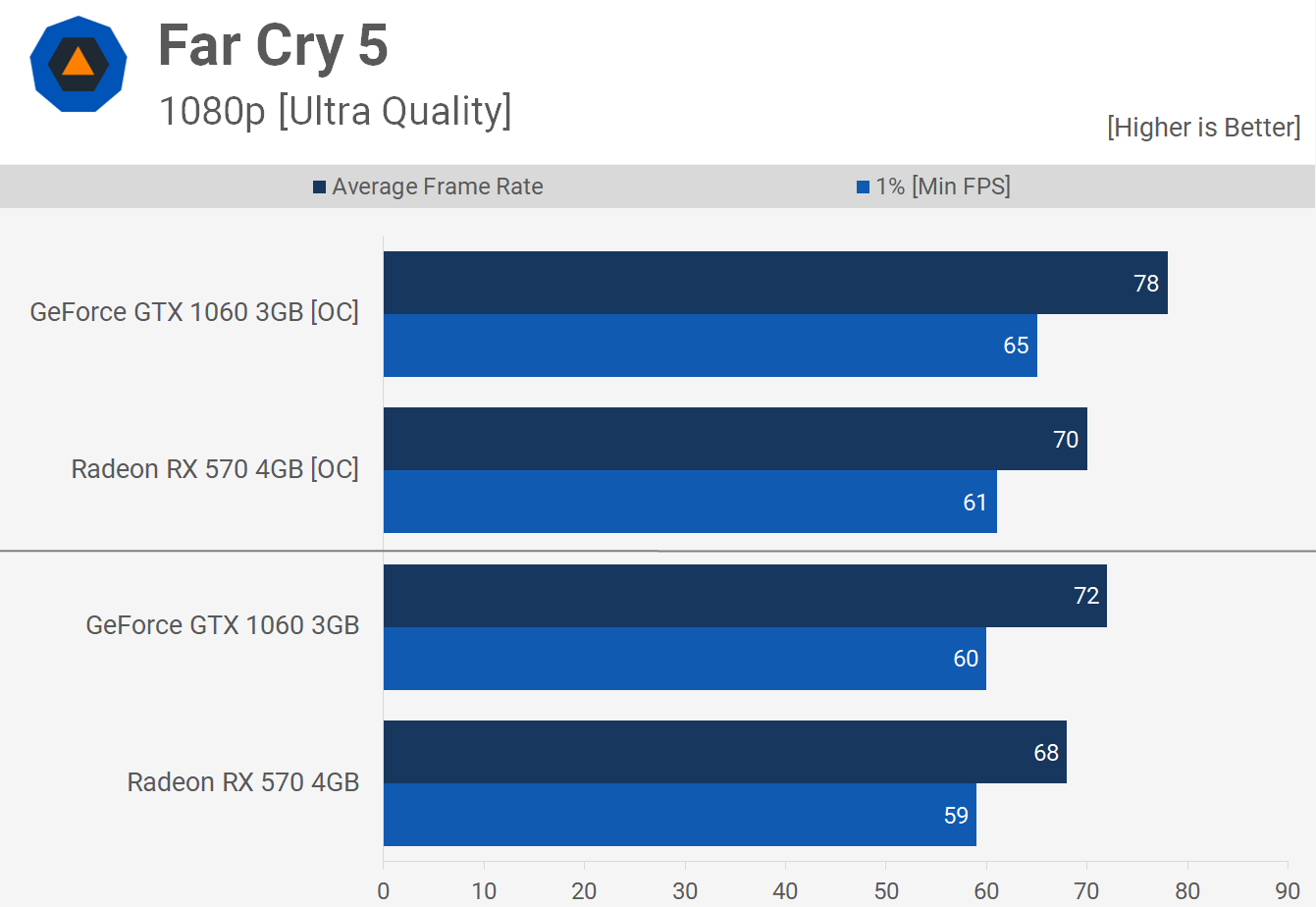

Although Far Cry 5 is an AMD-sponsored title, we saw little to no bias upon release; both Radeon and GeForce GPUs performed as expected. Here the GTX 1060 3GB is slightly faster than the RX 570 out of the box and 11% faster once overclocked.

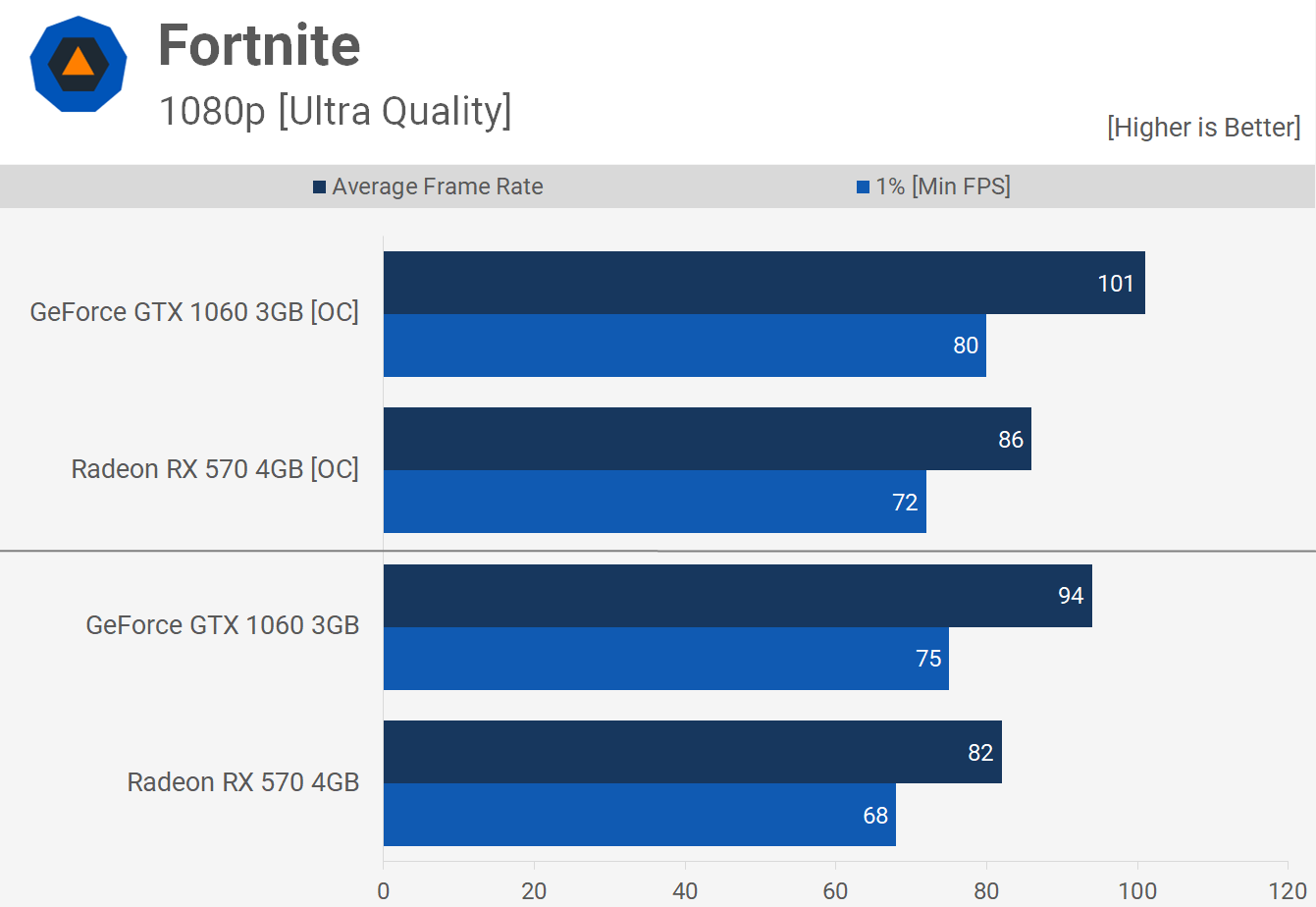

Fortnite is built on Unreal Engine 4, which tends to favour hardware from the green team. Here the GTX 1060 provides 15% more performance stock and 17% more once overclocked. Still, it’s worth keeping in mind that we're using the maximum in-game quality settings at 1080p and still seeing well over 60 fps at all times with the RX 570.

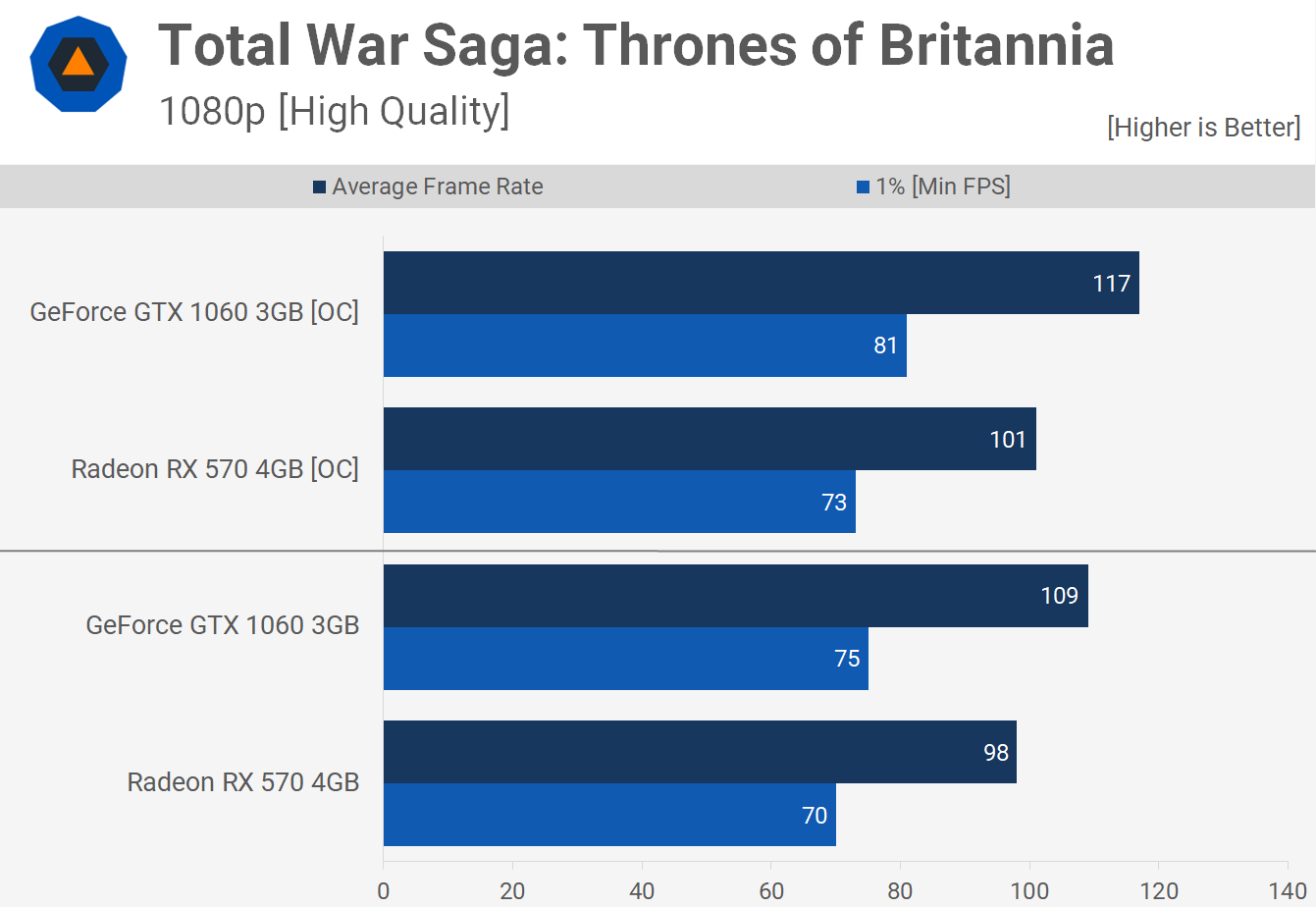

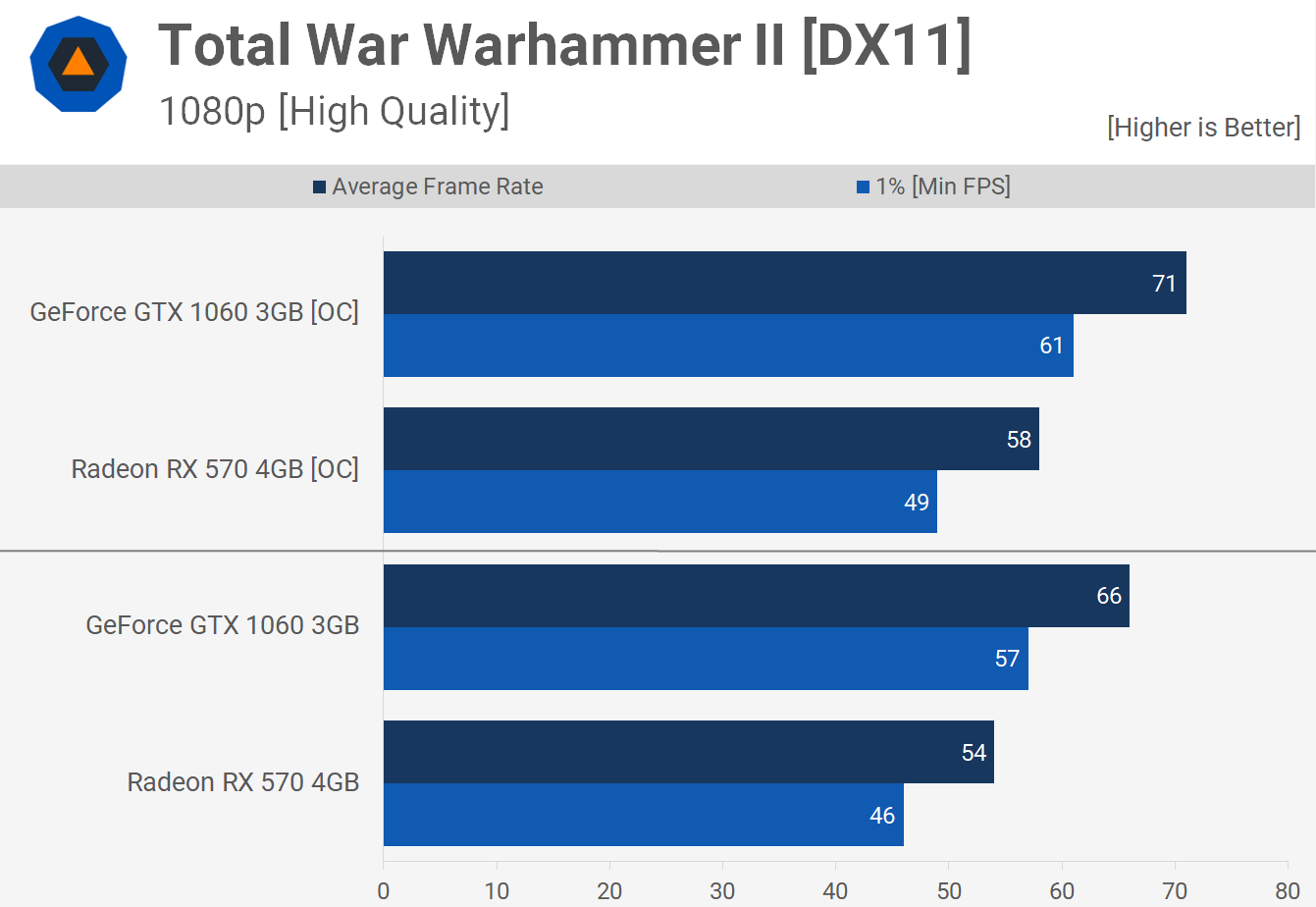

Total War Saga: Thrones of Britannia is a newly released title that plays well on both AMD and Nvidia hardware. That said, the GTX 1060 3GB has a reasonable performance advantage, providing 11% more frames out of the box and 16% more once overclocked. Still, there was no shortage of frames at 1080p using either GPU.

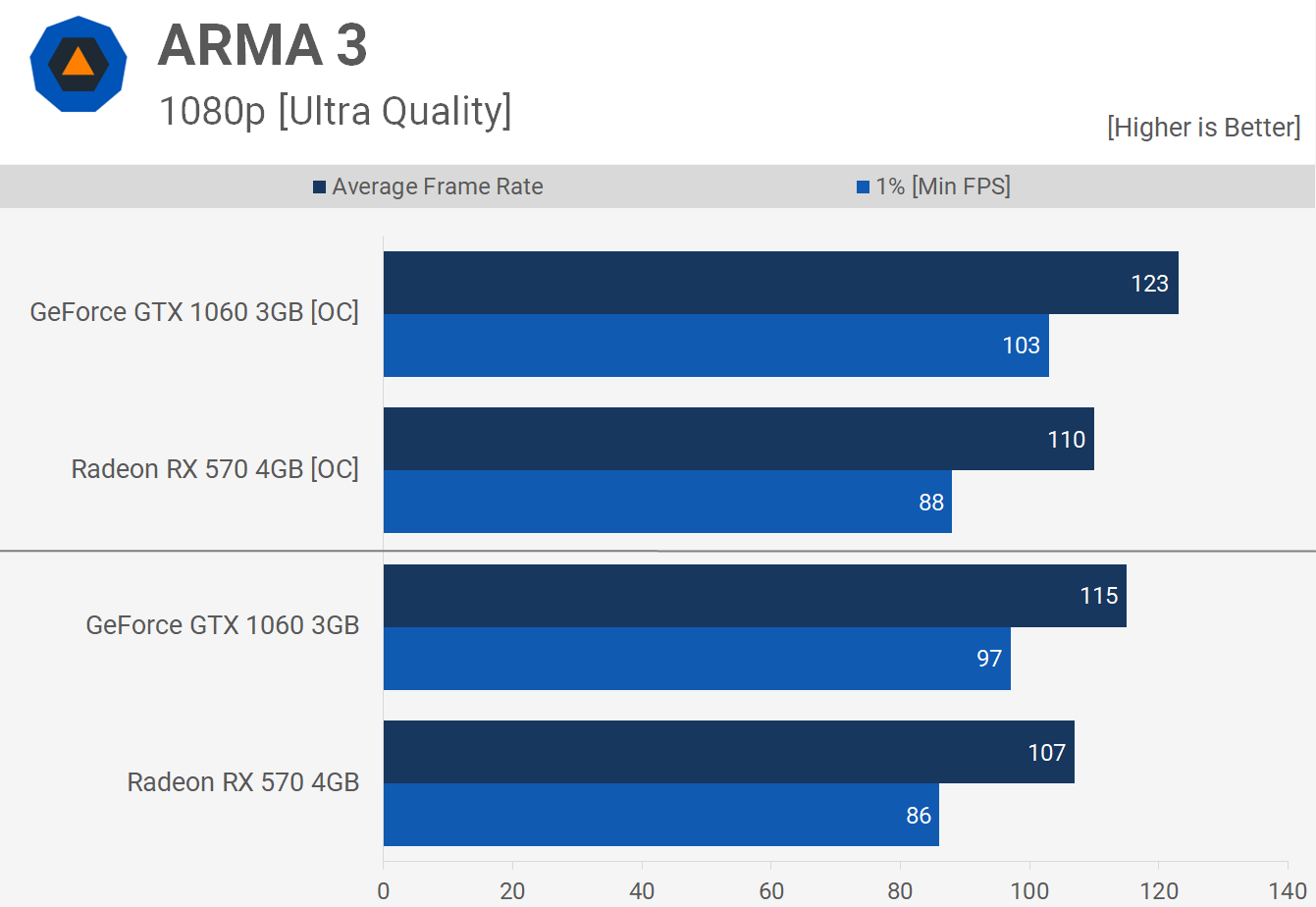

AMD’s ARMA 3 performance has improved dramatically in the past year, and although the RX 570 was slower than the GTX 1060 3GB, it was only 7% slower out of the box and provided well over 60 fps at all times.

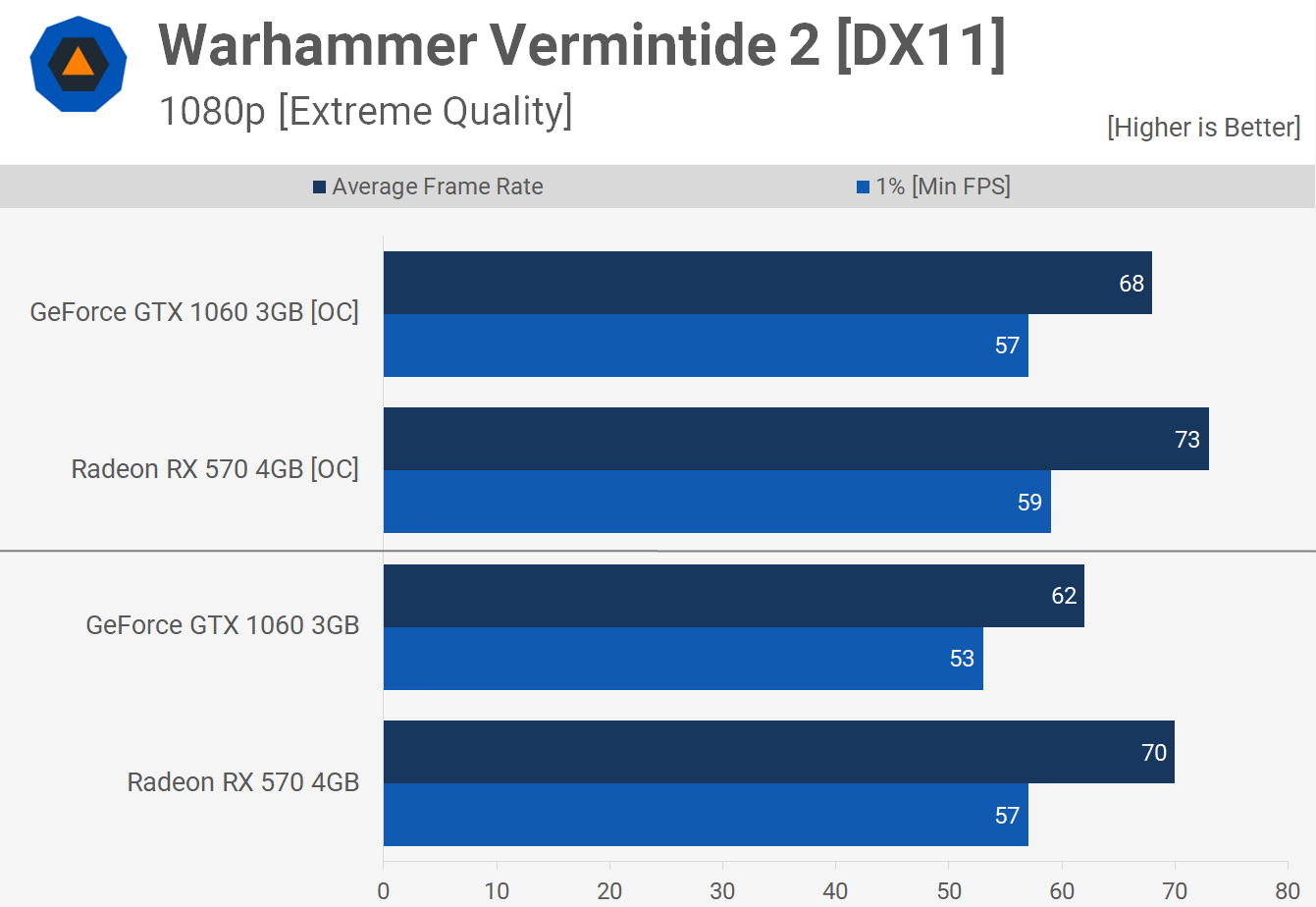

The last game we’re going to look at is Warhammer: Vermintide 2, another title that plays nicely with AMD hardware. Here the RX 570 spat out 70 fps on average out of the box, making it 13% faster than the GTX 1060 3GB.

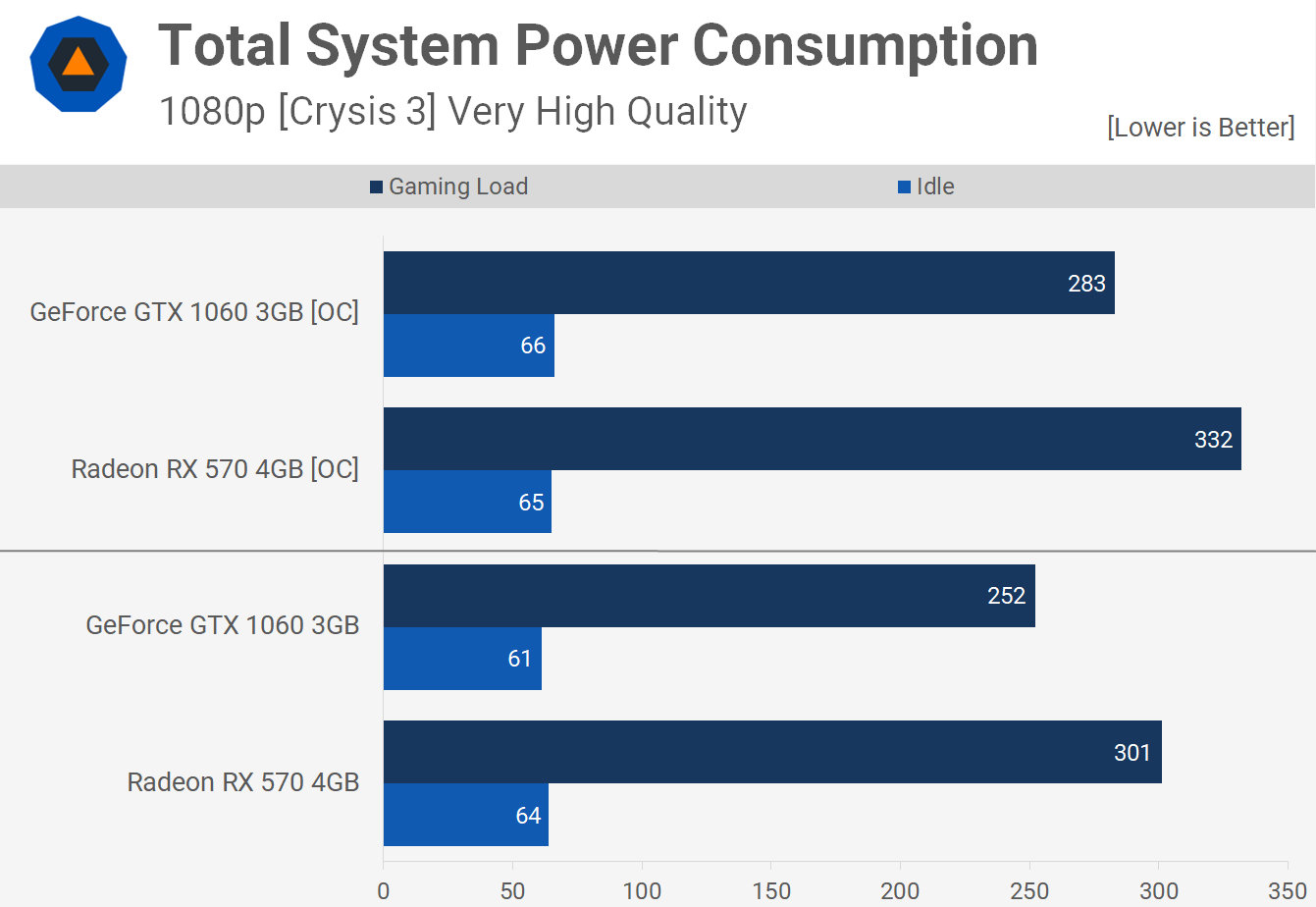

When it comes to power consumption, the RX 570 does draw a little more than the GTX 1060. It’s at a disadvantage here because its die is 16% larger, so it's not that surprising to see a 19% increase in total system consumption. Still, even when overclocked we’re only seeing a system consumption of 332 watts, and that includes a Core i7-8700K clocked at 5 GHz, so with a lower-end CPU it’s unlikely you’ll see total system consumption climb above 300 watts.
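The 16% die-size figure is easy to sanity-check. The die areas below are not stated in this review; they are the commonly published values (about 232 mm² for the Polaris chip in the RX 570 and 200 mm² for the GP106 in the GTX 1060), so treat them as assumptions. This only checks the size figure, not how die size translates into power draw:

```python
# Die areas in mm^2: assumed values from public spec sheets, not from this review.
POLARIS_DIE_MM2 = 232  # RX 570 (Polaris)
GP106_DIE_MM2 = 200    # GTX 1060 (GP106)


def percent_larger(a: float, b: float) -> float:
    """Return how much larger a is than b, in percent."""
    return (a / b - 1) * 100


print(f"RX 570 die is {percent_larger(POLARIS_DIE_MM2, GP106_DIE_MM2):.0f}% larger")
```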

Summary

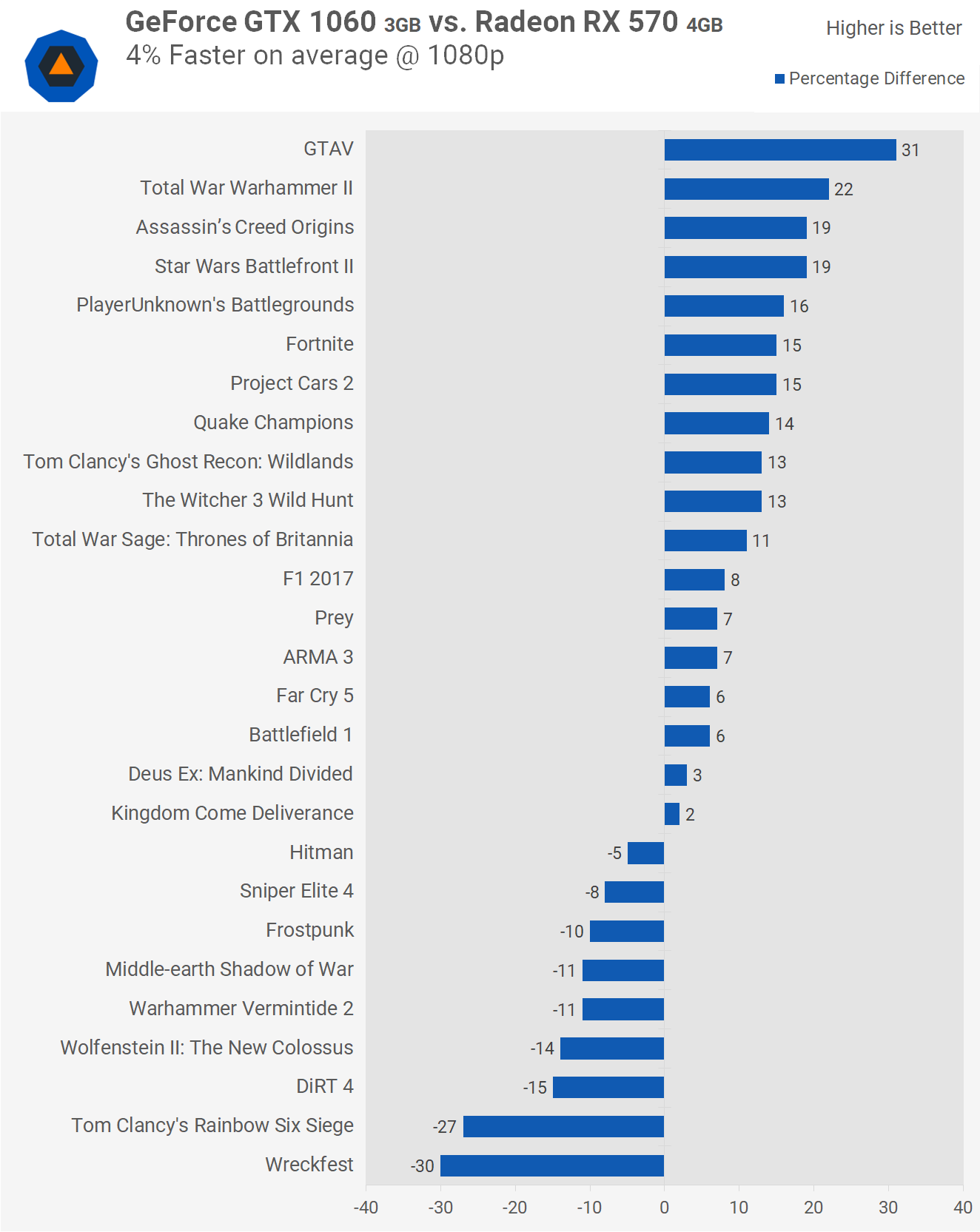

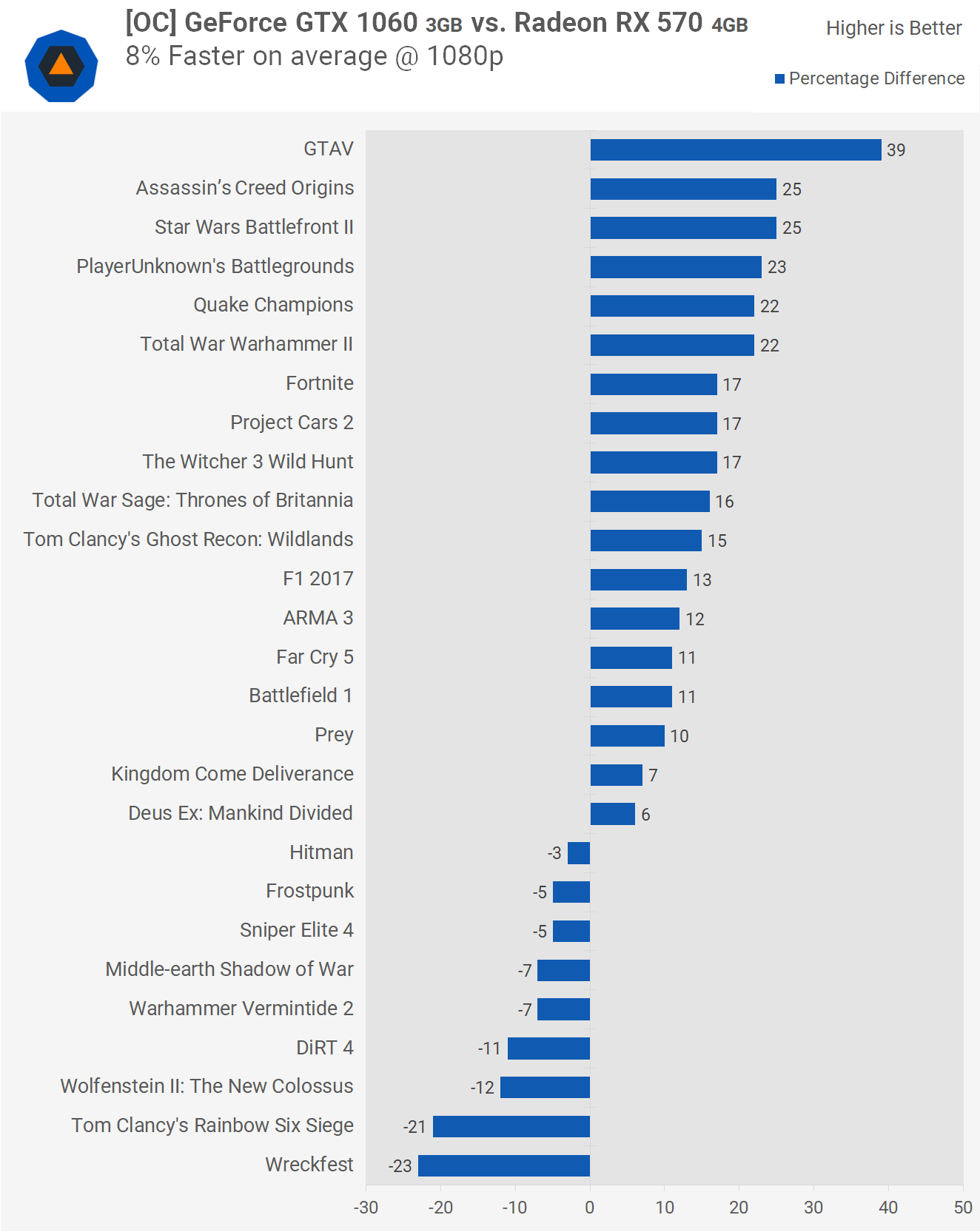

Now let’s get the full picture by looking at the results across all 27 games. Stock, out of the box, the GTX 1060 3GB was on average 4% faster than the RX 570. That's not a big margin, but it has grown by a couple of percentage points since last time, when the RX 570 was 2% slower, so the 3GB 1060 is still holding up well at 1080p.
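The review doesn't say how the 27 per-game results are combined into a single average. A common approach, assumed here rather than confirmed, is the geometric mean of per-game fps ratios, which lets a 2x win and a 2x loss cancel out instead of skewing the result. A minimal sketch with made-up fps values (not results from this test):

```python
import math


def average_margin(results: list[tuple[float, float]]) -> float:
    """Geometric-mean margin of card A over card B, in percent.

    results holds (fps_a, fps_b) pairs, one per game.
    """
    log_ratios = [math.log(a / b) for a, b in results]
    return (math.exp(sum(log_ratios) / len(log_ratios)) - 1) * 100


# Hypothetical (card A fps, card B fps) pairs, for illustration only.
example = [(120.0, 60.0), (60.0, 120.0)]
print(f"{average_margin(example):.1f}%")  # one 2x win and one 2x loss: 0.0%

# An arithmetic mean of the same ratios would wrongly report a 25.0% lead.
arith = sum(a / b for a, b in example) / len(example)
print(f"{(arith - 1) * 100:.1f}%")
```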

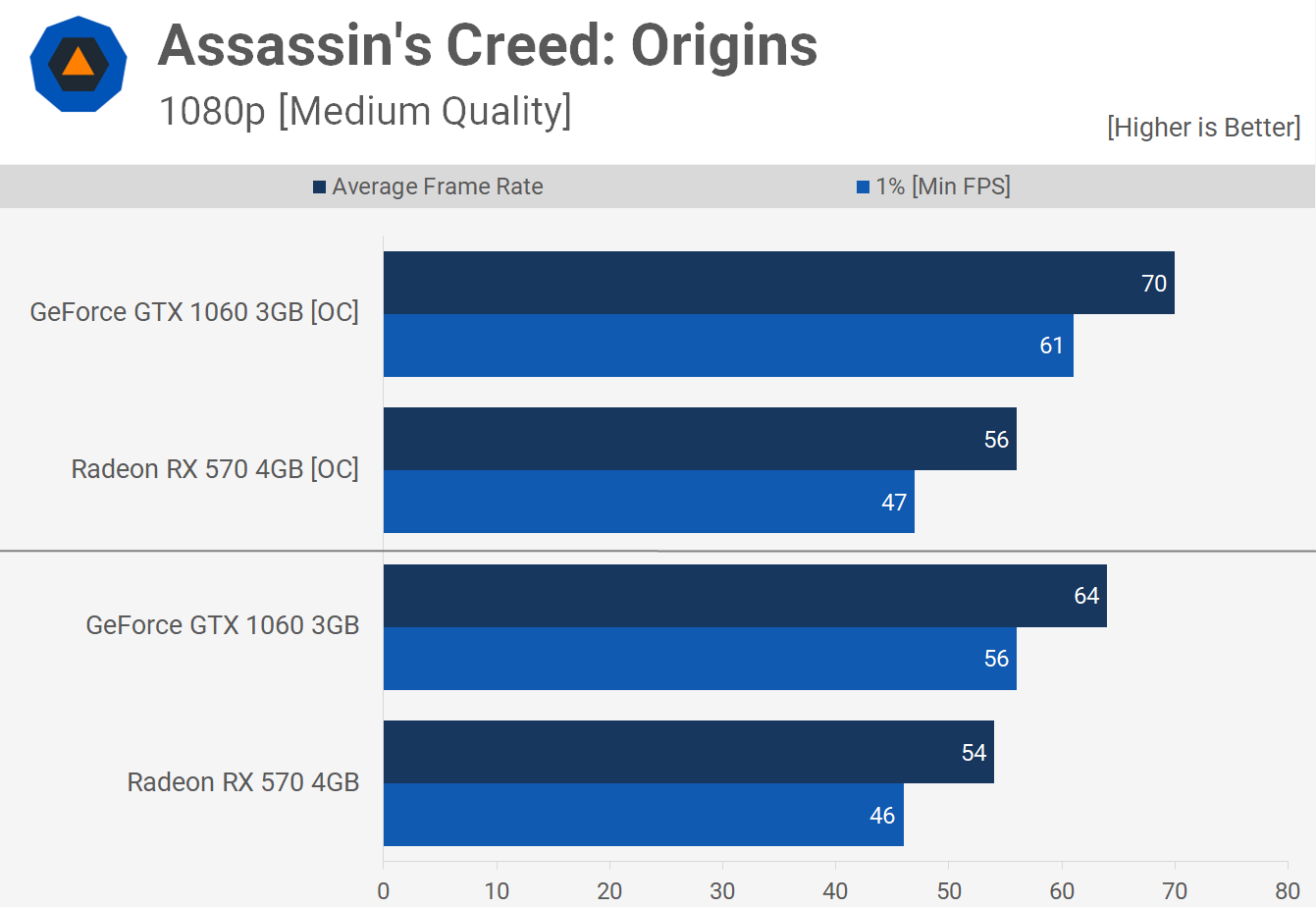

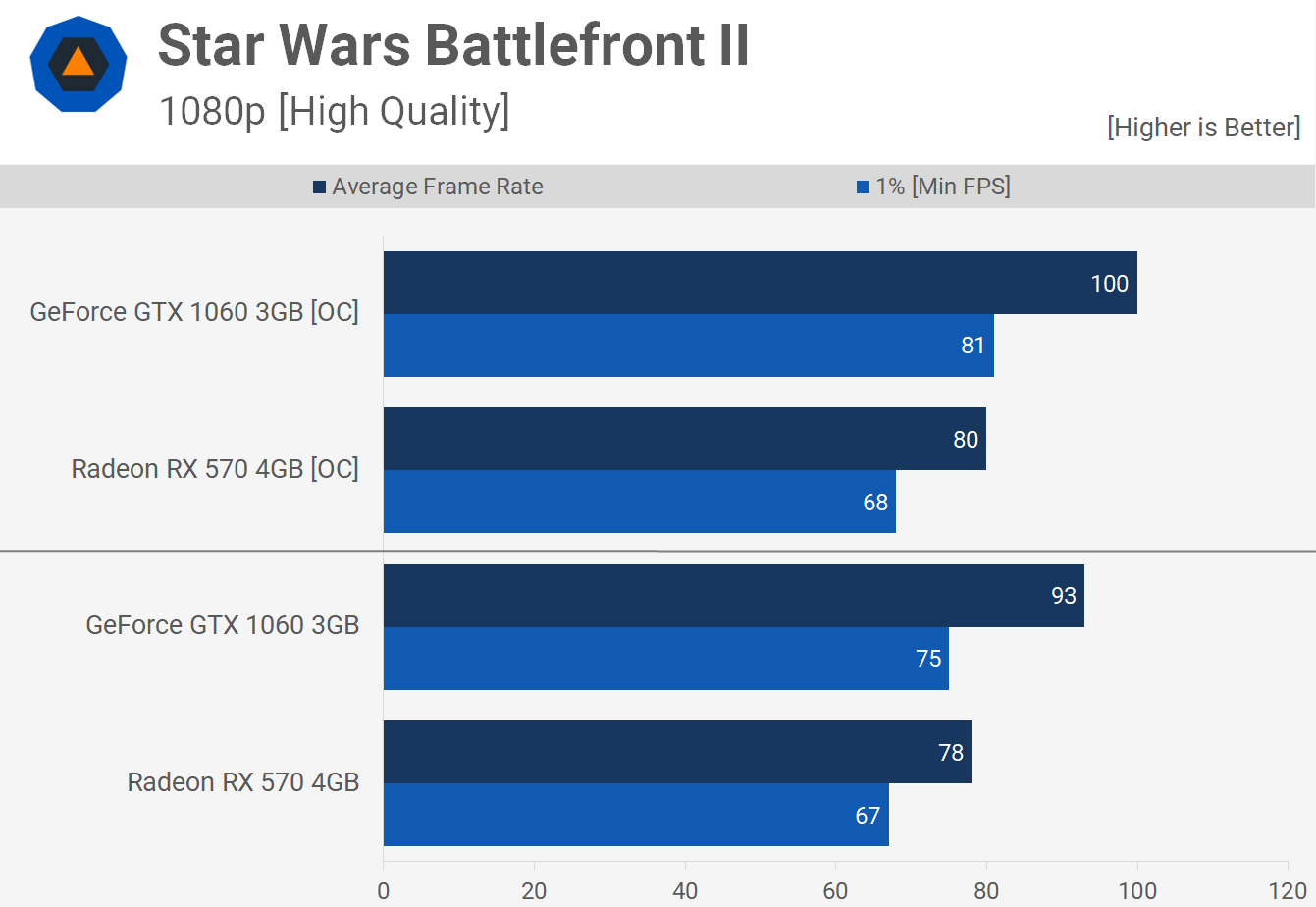

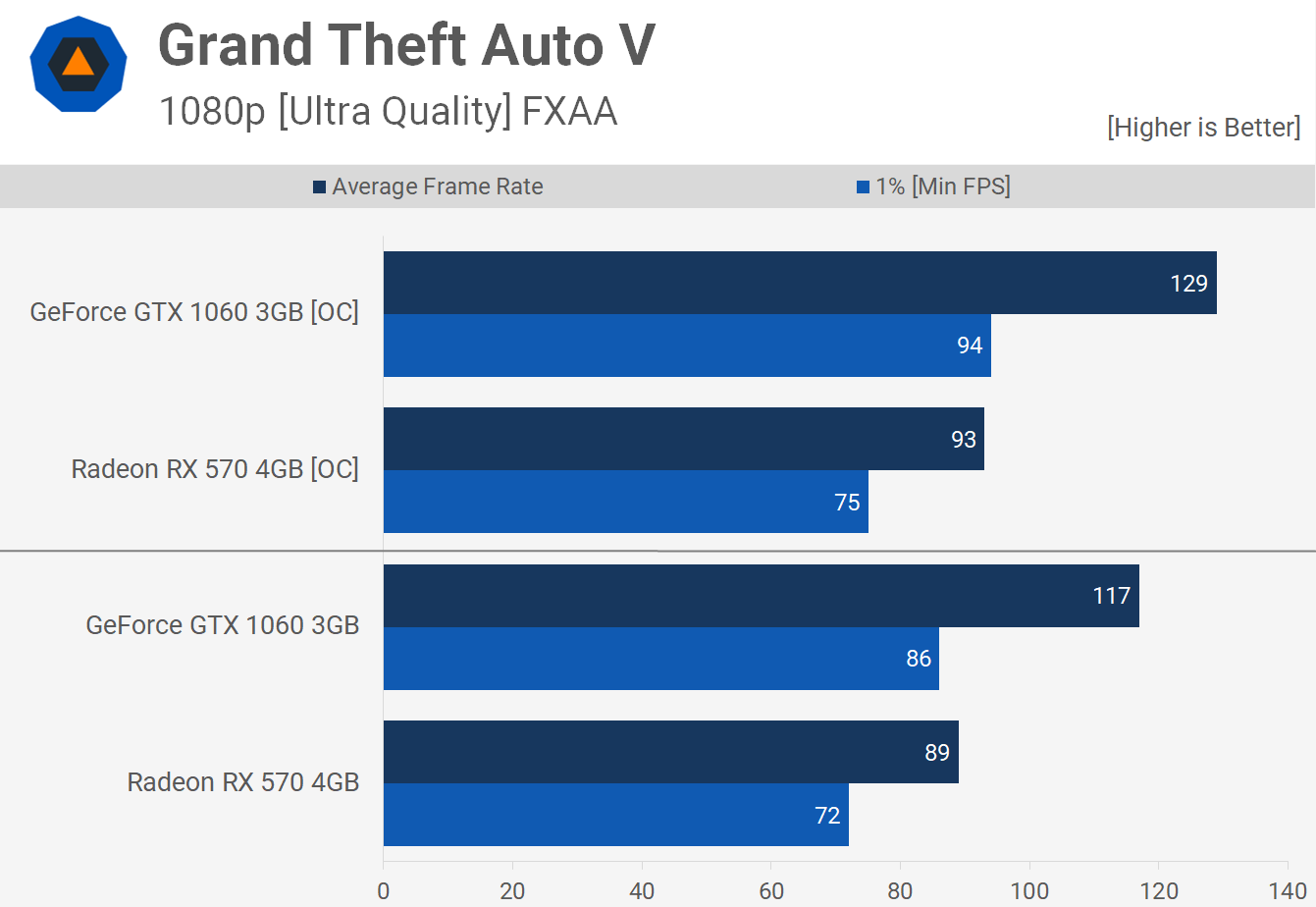

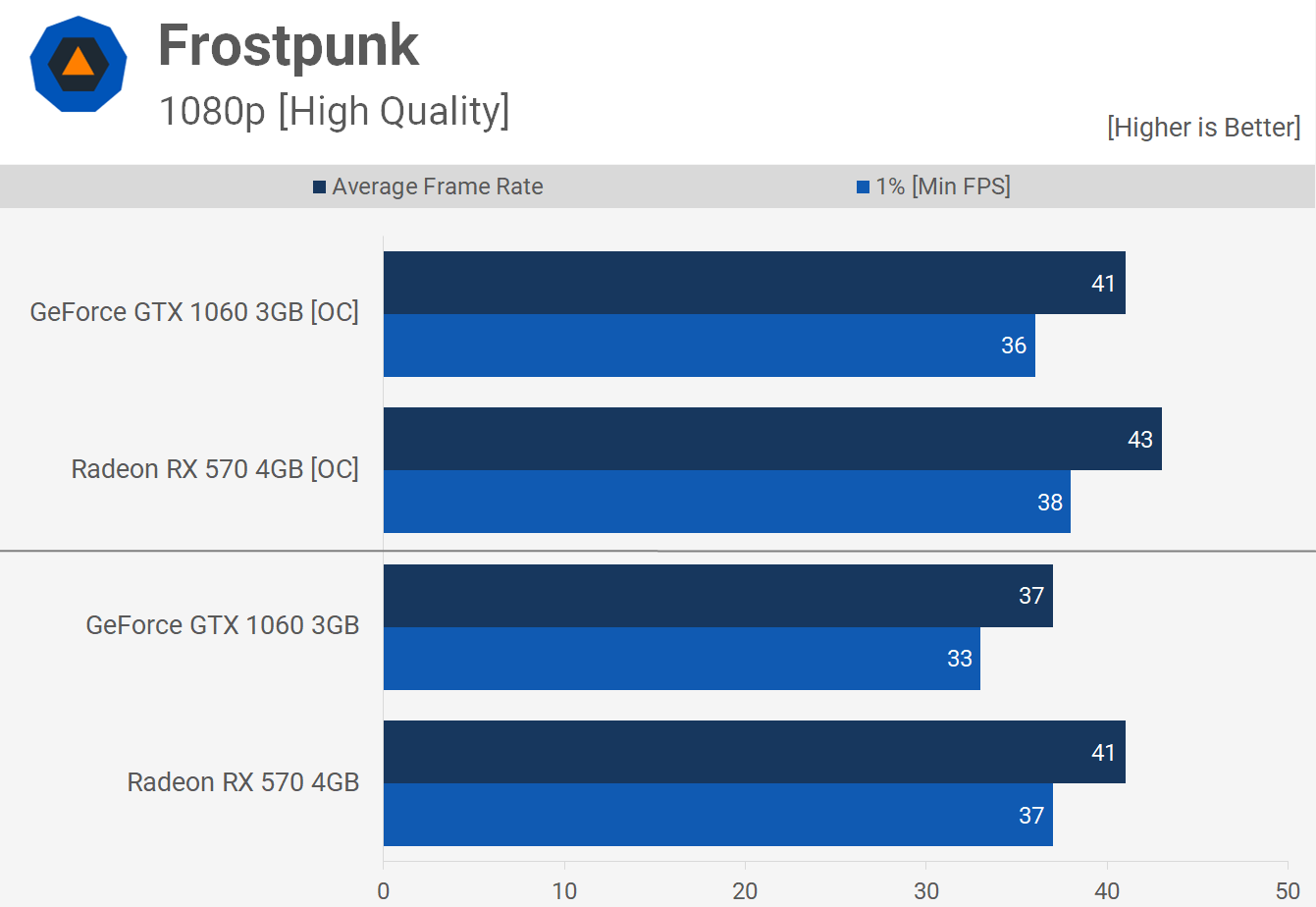

The 1060 enjoyed solid wins in GTA V, Warhammer II, Assassin’s Creed Origins and Battlefront II, but was less impressive in Wreckfest and Rainbow Six Siege.

With both graphics cards overclocked, the GTX 1060 3GB extended its lead to 8% and delivered considerably more performance in six of the titles tested, with around 20% more frames in those games. Its losses in titles such as Wreckfest and Rainbow Six Siege were also reduced. So if you plan on manually overclocking your graphics card, the GTX 1060 is very attractive, and because it consumes less power it also runs a little cooler, though temperatures vary quite a bit from one model to the next, so we won’t go into that data here.
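The change in margin from stock to overclocked shows how much more the GTX 1060 gained from overclocking than the RX 570 did. A quick sketch using the rounded margins quoted in this review; the function name `relative_oc_gain` is ours, and each input is the GTX 1060's fps divided by the RX 570's fps:

```python
def relative_oc_gain(stock_ratio: float, oc_ratio: float) -> float:
    """How much more card A gained from overclocking than card B, in percent.

    Each ratio is fps_A / fps_B, before and after both cards were overclocked.
    """
    return (oc_ratio / stock_ratio - 1) * 100


# GTX 1060 3GB (A) vs RX 570 (B), from the rounded margins quoted in the review.
cases = {
    "27-game average": (1.04, 1.08),
    "Battlefield 1": (1.06, 1.11),
    # The RX 570 led here (36% stock, 27% overclocked), so the ratio is inverted.
    "Rainbow Six Siege": (1 / 1.36, 1 / 1.27),
}

for name, (stock, oc) in cases.items():
    print(f"{name}: GTX 1060 gained {relative_oc_gain(stock, oc):.1f}% more")
```

That works out to roughly 3.8% for the overall average, 4.7% in Battlefield 1 and 7.1% in Rainbow Six Siege. Because the inputs are rounded whole percentages, treat these as approximate.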

Closing Thoughts

So which one of these GPUs should you buy? Depending on the games you play, one model might be a better choice than the other. Overall, though, the experience was very similar in almost every title tested. Last time I said there was no right or wrong choice here; they’re just too close to call, so get whichever is less expensive.

Having said all that, I personally gravitate towards the GTX 1060 3GB for a few reasons. I’m not happy about the 3GB naming, though: it’s not merely a GTX 1060 6GB with half the memory, as it’s also missing an SM unit, which takes the CUDA core count from 1280 down to 1152. So despite sharing the same name, it actually features 10% fewer cores. But that doesn’t change the fact that the 3GB 1060 is still a really good value product.
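The 10% figure follows directly from the SM count. Since disabling one SM removes the gap between 1280 and 1152 cores, one SM here is 128 CUDA cores, which puts the 6GB model at 10 SMs and the 3GB model at 9. A quick check, deriving cores per SM from that subtraction:

```python
CORES_PER_SM = 1280 - 1152  # one disabled SM accounts for the gap: 128 cores

full_sms = 1280 // CORES_PER_SM  # GTX 1060 6GB
cut_sms = 1152 // CORES_PER_SM   # GTX 1060 3GB

reduction = (1 - cut_sms / full_sms) * 100
print(f"{full_sms} SMs vs {cut_sms} SMs: {reduction:.0f}% fewer CUDA cores")
```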

Right now the GeForce is also slightly cheaper than the RX 570 while offering slightly better performance. So if I had a little over $200 to spend on a graphics card, that’s what I’d be getting right now.

If you can afford to pony up the extra $50 or so for the fully fledged 6GB model, then by all means do so, but at 1080p there’s no chance you’re going to see 20% more performance, so in terms of cost per frame the 3GB version offers much more value.
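The value argument in the last two paragraphs comes down to cost-per-frame arithmetic. A rough sketch using the starting prices quoted earlier ($230 for the GTX 1060 3GB, $240 for the RX 570) and the 4% stock average lead, with the RX 570 as the 1.00 baseline. The $280 price for the 6GB model is an assumption, simply $230 plus the "$50 or so" mentioned above:

```python
def cost_per_relative_frame(price: float, relative_perf: float) -> float:
    """Dollars per unit of performance, relative to a baseline card at 1.0."""
    return price / relative_perf


gtx_1060_3gb = cost_per_relative_frame(230, 1.04)  # 4% faster than the RX 570
rx_570 = cost_per_relative_frame(240, 1.00)        # baseline
print(f"GTX 1060 3GB: ${gtx_1060_3gb:.2f}, RX 570: ${rx_570:.2f} per relative frame")

# Break-even uplift: how much faster a pricier card must be to match cost per frame.
PRICE_3GB = 230
PRICE_6GB = PRICE_3GB + 50  # assumption: "$50 or so" more than the 3GB card
break_even = (PRICE_6GB / PRICE_3GB - 1) * 100
print(f"The 6GB model needs about {break_even:.1f}% more performance to break even")
```

On these inputs the GTX 1060 3GB works out to about $221 per relative frame versus $240 for the RX 570, and the 6GB card would need roughly 22% more performance just to match the 3GB model's cost per frame.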

Finally, if you want to take advantage of adaptive sync on a budget, the RX 570 makes more sense, as FreeSync monitors are more affordable. That said, the experience varies from display to display, so make sure you do your research there.


User Comments: 26


rociboci

Enjoyed the review, but I would like to see the mid-range GPUs tested on a mid-range CPU. The current setup is not representative of what users have. The GPU driver overhead is an important factor, especially for older systems.

Puiu

> Enjoyed the review, but I would like to see the mid-range GPUs tested on a mid-range CPU. The current setup is not representative of what users have. The GPU driver overhead is an important factor, especially for older systems.

Even on a mid range CPU you'll hit the GPU limit before the CPU limit with these 2 cards. You would have to go for something cheaper than an R5 1600 to see a a very small difference.

NightAntilli

I would never ever buy or promote the 1060 3GB due to its naming scheme. And yes, to me FreeSync is important enough to choose the RX 570 over the GTX 1060 3GB.

Additionally, it has 3GB only. The RX 570 4GB will likely fair better at 1440p than the 1060 3GB. Would love to see this tested actually.

> I would never ever buy or promote the 1060 3GB due to its naming scheme. And yes, to me FreeSync is important enough to choose the RX 570 over the GTX 1060 3GB.
>
> Additionally, it has 3GB only. The RX 570 4GB will likely fair better at 1440p than the 1060 3GB. Would love to see this tested actually.

Because running a 1080p card on a 2K panel seems like a good idea. Here's another one, get an Audi S8 and stick it with a LPG system..... The naming scheme .... again with that non-sense who gives a rat's ass what's it called? They could've named their whole line-up GTX 1080 and add letters to the end of it for all intents and purposes. I never heard someone cry wolf because he couldn't tell the 1060 6GB from the 1060 3GB...... The Freesync is a good argument if you can grab a decent enough panel. Other than that both perform the same despite one having 1 GB less vram that will "help" you in getting to that 30 FPS "sweetspot" for 2k and up panels ... please....

Anywho you can't go wrong with either of them if you're on a tight enough budget for a decent 1080p card

dirtyferret

But, but, but what about those of us that don't understand how RAM on a video cards work and/or how certain in game settings are nothing more then ram hogs?? We need the comfort of future proofing with more RAM, not factual evidence of actual in game performance.

GreenNova343

> Enjoyed the review, but I would like to see the mid-range GPUs tested on a mid-range CPU. The current setup is not representative of what users have. The GPU driver overhead is an important factor, especially for older systems.

Which will give you 1 of 2 results:

1. Both GPUs will see slightly lower performance, but the same percentage difference between the two, so the final conclusion will be identical; or,

2. Both GPUs will see identical performance (because of both being limited by the "mid-range" CPU), making the final conclusion even more of a "pick the GPU based on the game(s) you're going to play", since their perceived performance & price will be identical.

> Additionally, it has 3GB only. The RX 570 4GB will likely fair better at 1440p than the 1060 3GB. Would love to see this tested actually.

1. wrong, the 570 fairs the same at 1440p as 1080p. As Steve stated it's all about the game

2. there is a thing called google and tech review web sites that review these cards, they all post those results...

see links below, the 570 is a rebranded 470 with a slight OC

[link]

[link]

> I would never ever buy or promote the 1060 3GB due to its naming scheme. And yes, to me FreeSync is important enough to choose the RX 570 over the GTX 1060 3GB.

At least with the 1060 3GB the naming scheme has indicated a change in specifications unlike the GTX 1030. A 10% reduction in cuda cores is more than offset with the 20% reduction in (MSRP) price.

Freesync is about the only reason to grab the 570 assuming the same price. I'm considering a switch to AMD when the next GEN drops just for the feature.

> Additionally, it has 3GB only. The RX 570 4GB will likely fair better at 1440p than the 1060 3GB. Would love to see this tested actually.

As others have stated both cards are more suited to 1080p gaming, unless you're referring to playing lighter e-sports titles and not AAA.

> I would never ever buy or promote the 1060 3GB due to its naming scheme. And yes, to me FreeSync is important enough to choose the RX 570 over the GTX 1060 3GB.
>
> Additionally, it has 3GB only. The RX 570 4GB will likely fair better at 1440p than the 1060 3GB. Would love to see this tested actually.

Steve tested the two cards last year across 29 games at 1080p and 1440p.

[link]

Apart from the anomalous result for Resident Evil, that testing produced a similar outcome to this recent analysis at 1080p, and showed that the 1440p results paralleled the 1080p performance. Meaning, there wasn't an instance where the 3GB choked at 1440p and the 4GB was demonstrably better (apart from Deus Ex, which yielded fps minimums below 30 on both cards).

NightAntilli

Fair enough.

Edit; After looking at the games individually... Yeah... The RX 570 is a better deal for 1440p, at least for DX12 games. DX11 is a toss-up.

NightAntilli

> Because running a 1080p card on a 2K panel seems like a good idea. Here's another one, get an Audi S8 and stick it with a LPG system.....

Always funny how using ensuring there's no bottleneck for a CPU by using 720p, a resolution that no one would likely use any high end gaming CPU for, is considered a good practice, but using a 1440p resolution for a 1080p GPU to avoid bottlenecks suddenly is stupid... Uhuh...

> The naming scheme .... again with that non-sense who gives a rat's *** what's it called? They could've named their whole line-up GTX 1080 and add letters to the end of it for all intents and purposes. I never heard someone cry wolf because he couldn't tell the 1060 6GB from the 1060 3GB......

It's customer deception, and I don't stand for it. If you want to support that, that's on you. AMD is also guilty of something similar with their RX 560. Don't recommend anyone buy that one either... Nor any 1050 Ti due to the DDR4 deception.

> The Freesync is a good argument if you can grab a decent enough panel. Other than that both perform the same despite one having 1 GB less vram that will "help" you in getting to that 30 FPS "sweetspot" for 2k and up panels ... please....
>
> Anywho you can't go wrong with either of them if you're on a tight enough budget for a decent 1080p card

This is true I guess.

Squid Surprise

I dispute these results! Everyone knows that AMD cards age far better than Nvidia cards!! How is it possible that Nvidia's lead has grown after a year?!? I still recommend the AMD because in 5 years, it will have 1080 performance! /sarcasm

dj2017

For someone who needs a $200-$250 graphics card to pass 12-18 months, the GTX is the obvious option.

For someone who is going to hold it for as long as possible, 3+ years, the 570 could be a better bet. That extra GB could become very important if we don't see more 3GB cards in the market from Nvidia, to keep programmers optimizing for that amount of memory.

Azshadi

If it's a good idea to test the CPU that doesn't mean it's a good one to do GPUs. First of all ANY game engine has limit on how many FPS that can thing can crank out. Second of all if a card barely hits 60 FPS in 1080p would you test it in 1440p? Seeing that all that is 1440p is high refresh rate? Ever tried to limit the fps to 60 on a 144hz panel to see if there's any difference between 60 and 144? if the damn thing is "playable" at full HD care to take a guess what happens when you push the resolution up? Also you keep forgetting the fact that the GPU tends to bottleneck the system far faster than a CPU ever could since 90% of the games are GPU bound.

Heck no, the label says clearly 3GB 6GB. Even if you didn't see a computer part in your whole life-time you can tell that 3>6 and you can also notice the one that ends with 6 has a higher price.... I swear to god ever since people in America started suing companies because they are drool dripping morons and try to dry their cats in the microwave because it didn't say so in the instruction book everyone is quickly passing judgement on stuff that should be common sense... please... it's written on the box you know what you're buying end of story and a company shouldn't be taken to the cleaners because they're too dumb to read a 200 word article or read the damn label on the box.

Squid Surprise

Um.... we do know that 1080p is basically the same thing as 2k, right?

Dimitrios

> For someone who needs a $200-$250 graphics card to pass 12-18 months, the GTX is the obvious option.
>
> For someone who is going to hold it for as long as possible, 3+ years, the 570 could be a better bet. That extra GB could become very important if we don't see more 3GB cards in the market from Nvidia, to keep programmers optimizing for that amount of memory.

Nope. With how Nvidia drivers are going vs AMD, I would stick with AMD now and the long run. UNLESS Nvidia changes their act with drivers I never had a problem since the old 7700 series days. I have the better version of the AMD RX560 4GB with all the shaders and extra power pin connector over clocked and have drivers from October and no issues gaming at 1080P. Here is some proof AMD drivers are better then Nvidia. [link]

Benjiwenji

> The naming scheme .... again with that non-sense who gives a rat's ass what's it called? They could've named their whole line-up GTX 1080 and add letters to the end of it for all intents and purposes. I never heard someone cry wolf because he couldn't tell the 1060 6GB from the 1060 3GB......

> Other than that both perform the same despite one having 1 GB less vram that will "help" you in getting to that 30 FPS "sweetspot" for 2k and up panels ... please....

Please your research on these cards before make false claims. It is simply false to imply the only difference between the 1060 3gb and 6gb variant is in the VRAM, when in fact the 3 gb version has a disabled SM, 10% CUDA cores reduction, and the halved VRAM. Therefore, the 1060 3gb and 1060 6gb are different GPUs and ought to be named differently.

HardReset

> I dispute these results! Everyone knows that AMD cards age far better than Nvidia cards!! How is it possible that Nvidia's lead has grown after a year?!? I still recommend the AMD because in 5 years, it will have 1080 performance! /sarcasm

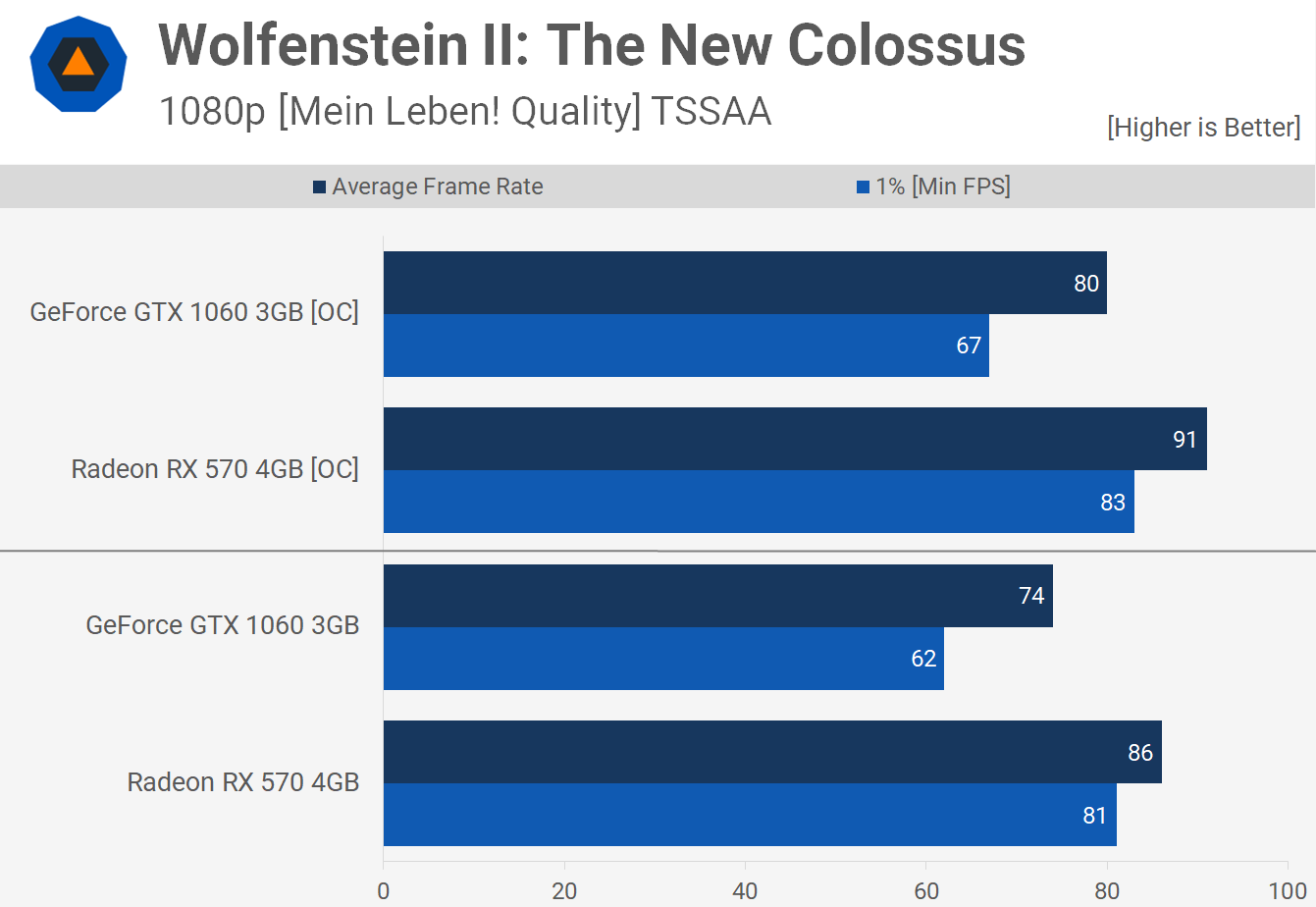

Not surprising as most games are quite old, like GTA V that is almost 5 years old and Far Cry 5 that's almost 6 years old. About only game that can be considered modern is Wolfenstein II New Colossus. There are also total craps like AC Origins with double DRM and Battlegrounds that is still in beta state.

And before anyone comments like Far Cry 5 launched this year, it uses exactly same engine as Far Cry 3 uses. And Far Cry 3 is 2012 title.

Azshadi

> Please your research on these cards before make false claims. It is simply false to imply the only difference between the 1060 3gb and 6gb variant is in the VRAM, when in fact the 3 gb version has a disabled SM, 10% CUDA cores reduction, and the halved VRAM. Therefore, the 1060 3gb and 1060 6gb are different GPUs and ought to be named differently.

First of all I never said that that the only difference is 6 and 3. I was trying to put it on the level of the "average not so tech savy" consumer.... Do you actually think that people will start looking for disabled SM's if they don't even know what the term is?

Sigh... No they could've named it "carrots" for all I care. Do you think I do not know that bit of information? And NO they are the same gpu - GP106. Just in case it wasn't clear before they are officially named GTX 1060 6GB and 3GB so there would be your difference for name's sake (https://en.wikipedia.org/wiki/GeForce_10_series). There isn't a box out there that's just branded "GTX 1060" (if you don't count the china AIB ones although didn't see those w/o the 6 5 or 3 for that matter). I didn't say it's a good branding name but there is a difference and unless people can't read and suddenly start buying GPUs the argument that there is little difference between the names is very far fetched.

SamNietzsche

I see where you're coming from here. I think appropriate nomenclature would be 1060 (1060 3gb) and 1060 ti (1060 5/6gb) but starting a fight with someone isn't helping them see that view point. Being the only tech savvy person in my household, I can definitely agree with the argument that even a lesser informed individual would understand that the 1060 6gb would be the better card. Boycotting a specific card because you don't agree with a minor performance/price reduction is petty. Azshadi has been pretty patient with their side of the argument, and therefore looks more competent. C'mon man, don't get up in arms over this. You're making red team fans look bad.

NightAntilli

So? APIs also have a limit to how many draw calls they can make. Does that somehow negate the lower res CPU test?

Did anyone stop testing a CPU at 720p because it is already a limit at 1080p?

That actually depends on a lot of factors. The type of game, the API, the resolution, the AA settings, the power of the card, the drivers, and so on and so on. Saying that a GPU tends to bottleneck faster than a CPU is an empty statement.

Oh so you don't know that the GTX 1060 3GB is a cut down chip?

The difference implies that only the memory is the difference, and that is the issue. It's completely irrelevant that the original GPU is the same. It has disabled shaders, and THAT is what matters. It's the reason the GTX 1070, GTX 1070 Ti and GTX 1080 have different names. Imagine if the 1080 Ti and the 1080 were both named GTX 1080, but one was called the GTX 1080 11GB and one was called the GTX 1080 8GB, and the price was a mere $50 difference. Don't you see the problem with this? If you don't, then you are the problem.

> If it's a good idea to test the CPU that doesn't mean it's a good one to do GPUs.

It's the EXACT SAME principle. I don't see how anyone can argue this.

> First of all ANY game engine has limit on how many FPS that can thing can crank out.

> Second of all if a card barely hits 60 FPS in 1080p would you test it in 1440p?

> Seeing that all that is 1440p is high refresh rate? Ever tried to limit the fps to 60 on a 144hz panel to see if there's any difference between 60 and 144? if the damn thing is "playable" at full HD care to take a guess what happens when you push the resolution up? Also you keep forgetting the fact that the GPU tends to bottleneck the system far faster than a CPU ever could since 90% of the games are GPU bound.

> First of all I never said that that the only difference is 6 and 3. I was trying to put it on the level of the "average not so tech savy" consumer.... Do you actually think that people will start looking for disabled SM's if they don't even know what the term is?

Let me guess. You just looked that up to save face didn't you?

In any case, that is EXACTLY the problem. The average not so tech savy consumer does not know the details, and supporting the likes of these cards is basically encouraging nVidia to lie to their unknowing customers. You know, just like happened with the GTX 970 and the 3.5 GB fiasco. But yeah, you don't care because you're too busy adoring nVidia.

And spare me the argument that the 1080 Ti and 1080 are different chips. Like you yourself said, the average not so tech savy consumer is not going to look up whether they have different chips or not.

"Boycotting a specific card because you don't agree with a minor performance/price reduction is petty."

Seriously? That is NOT the issue at all. No one is boycotting the GTX 1070 Ti because it is a minor performance/price reduction compared to the 1080. Don't distort the facts.

Azshadi has not been 'patient'. He has been passive-aggressive and condescending. And the fact that you support him says it all. Especially that you have to bring in shaming tactics with regards to team colors and whatnot.

Benjiwenji was correct in his statement. That Azshadi is capable of googling to try and save face after the fact does not change things, nor does it warrant you to tell BenjiWenji that he's making a particular demographic look bad, when he posted only facts.

sucker

As long naive peeps keep buying this overprice rubbish, they just keep selling it. Takes nvidia for example . Why integrated g-sync directly into the graphic card when we could just add a card into a monitor and charge double!

Azshadi

Stuff you said

Thank you sir you made me laugh more than the comedy specials on Netfilx,now I know I'm wasting my breath,

A. How the heck is the exact same principle since games become MORE GPU DEMANDING over time? Take the most powerful CPU of this time and run it with a 560 guess what? It's going to be the same pile of crap no matter what CPU you throw at it, that's mainly because the GPU is to weak, If you would like to see more useless benchmarking for a card that is aimed at 1080p because at some point you might want to hook a 4k panel on it, Google, no one in their right minds that spends $250 on a GPU would think "Hey I wonder how it does 4k since it barely spits out 60 fps on 1080p". Why on God's green Earth do you think 720p tests are used? newsflash to eliminate the GPU dependency. What bottleneck are you trying to expose when running a higher resolution other than the GPU itself? What's your opinion on CPUs performing the same at higher resolutions? How would a GPU test at 4k would make any difference for a 1080p card? You are implying that testing 2 different parts that have different roles in the same test is a good idea... it's not. Although you might think that having more Vram is a good thing if you want to go to a higher resolution, while that might be true in part, you have to take into account if the chip can push the that amount of Vram, if this wasn't a thing we would all be gaming on 8800 GT's with 12 GB of Vram.... both of these cards could have 240 GB of HBM they won't be able to use them.... That's why there's little to no difference between the 580 4gb and the 8gb version in higher resolutions, does it help? Yes, does it make the whole experience better? Hell no.

B. Please educate me more on how a CPU becomes the bottleneck faster than a GPU does. Here's a hint those APIs you quoted and have no clue about at this point tend to lift the weight off the CPU and focus it on the GPU. Here's another "empty statement" which part requires regular updates more in let's say 1 year in order for it to perform ok in a title? Also please educate me more how can a Ivy Bridge part still does not bottleneck the heck out of a 1080Ti.

C. Saving face? Hardly, it was plastered all over the tech sites, no one could miss it, furthermore no one brought up the cut down version, the whole debate started with the naming convention being "hard to get", but then again I'm wasting my breath since I can't convince you otherwise :).If you can't follow a conversation, stop having it (this is me being condescending). All sarcasm aside as long as it's listed somewhere everything is fair game, all you have to do is read, the specs are on the manufacturer's website, the retailer's website, take your pick, you don't have to have a degree in computer science to figure out if a number is higher (CUDA cores) it should perform better.

D. "Adoring nVidia" this would be the time that I would really need to be condescending. Here's the deal I don't care if BOSCH is making my GPU as long as it fits my needs, this wasn't the subject to begin with anyway, stop moving the goal posts. The 3.5 GB was a fiasco and guess what? they got sued and lost :D, that was deceptive marketing according to the court, they paid up, this is a whole different scenario it's not even remotely close to what you're implying. As long as your point is thrown out of court it means nothing.

E. Dude seriously ... Does the box say 6GB? Yes, it does, deal with it, you know what you're buying it's common sense.

Last but not least the average non tech savy consumer's judgement is way easier than you might think, the more expensive the part the better the performance that's why people buy i9s and Threadrippers and play Minecraft on them....

NightAntilli

Don't games become more cpu demanding over time too? Why would anyone upgrade any CPU if this wasn't the case?

Same as someone will not spend $400 on a CPU to play at 720p. You still don't get it do you? Do yourself a favor and stop arguing.

What bottleneck are you trying to expose when running a lower resolution other than the CPU itself?

It's most likely a GPU bottleneck, although it can be a memory (both system and GPU), API, engine... Basically it can be anything that is not a CPU bottleneck.

Uhm... We're testing graphics cards, not only GPUs. Testing the limitations of the memory is valid, considering over time memory and bandwidth use grow over time.

A graphics card has one specific role.

This is not untrue. Except it generally does make the experience better since the frametimes are superior, but let's leave that out.

Yes... For what? Oh right, to remove the CPU limit. You just answered your own question.

Try playing at 1080p with a 1080Ti. I bet the newer CPUs do a lot better.

So basically you expect everyone to go in depth before buying a product. It's fine if someone expects to get a large pizza but gets a medium one instead. Got it.

They paid? Not really. The amount they had to pay pales in comparison to what they gained from the card. Additionally, it was only valid to the US, and yes, the US is not the only place in the world.

And then you talk about moving goal posts...

If that was the case, the majority of sold PC parts would be high end. They aren't.

> Thank you sir you made me laugh more than the comedy specials on Netfilx,now I know I'm wasting my breath,

There you go again with the arrogance, scorn and condescending attitude. It doesn't get you any points.

> A. How the heck is the exact same principle since games become MORE GPU DEMANDING over time?

> Take the most powerful CPU of this time and run it with a 560 guess what? It's going to be the same pile of crap no matter what CPU you throw at it, that's mainly because the GPU is to weak, If you would like to see more useless benchmarking for a card that is aimed at 1080p because at some point you might want to hook a 4k panel on it, Google, no one in their right minds that spends $250 on a GPU would think "Hey I wonder how it does 4k since it barely spits out 60 fps on 1080p".

> Why on God's green Earth do you think 720p tests are used? newsflash to eliminate the GPU dependency. What bottleneck are you trying to expose when running a higher resolution other than the GPU itself?

> What's your opinion on CPUs performing the same at higher resolutions?

> How would a GPU test at 4k would make any difference for a 1080p card?

> You are implying that testing 2 different parts that have different roles in the same test is a good idea... it's not.

> B. Please educate me more on how a CPU becomes the bottleneck faster than a GPU does. Here's a hint those APIs you quoted and have no clue about at this point tend to lift the weight off the CPU and focus it on the GPU.

> Here's another "empty statement" which part requires regular updates more in let's say 1 year in order for it to perform ok in a title? Also please educate me more how can a Ivy Bridge part still does not bottleneck the heck out of a 1080Ti.

> E. Dude seriously ... Does the box say 6GB? Yes, it does, deal with it, you know what you're buying it's common sense.

> Last but not least the average non tech savy consumer's judgement is way easier than you might think, the more expensive the part the better the performance that's why people buy i9s and Threadrippers and play Minecraft on them....

SamNietzsche

Note that at the time of my post, Azshadi had not yet expressed any undeserved sarcasm. The "googling to try and save face" had not yet been established. The boycotting was literally the original source of the argument. You were ultimately against the 1060 3gb specifically for it's naming scheme, not it's performance. In the end, yes it's customer misinformation to label it a full 1060, or at least to not differentiate the 6gb as "1060ti" just like it's customer misinformation to declare the gtx 970 had 4gb of Ddr5 memory when it had only 3.5. Ultimately that point is irrelevant in both cases as the card performs perfectly in line with Nvidias specifications.

Kytetiger

My 2cents:

I bought a GTX 1060 3GB last summer, knowingly what were at hand (less mem, less cuda, lower clock).

But I had other criterion in mind than just perf, cost and mem:

- smaller consumption because I play mostly light games (heaviest is TitanFall2)

- quieter

- price in my region. Last year, prices were crazy but I needed a card. If I could have waited 1 more year, I would have chosen differently.

- CUDA (for video editing)

But I'm totally with you. The naming scheme is awful.
